- Foreword

- 1. What is VOSK?

- 2. Usage steps

- 1. Environment preparation

- 2. Maven dependencies

- 3. Language Model

- 4. Run the code

- Summary

- Easy Way to Learn Speech Recognition in Java With a Speech-To-Text API

- Asynchronous Rev AI API Java Code Example

- Streaming Rev AI API Java Code Example

- What is the Best Speech Recognition API for Java?

- Labelled Data and Machine Learning

Foreword

At present, the mainstream speech recognition vendors include iFLYTEK, Baidu, and Google, but their official websites show that few of them offer an offline version for Java. The iFLYTEK offline package is Android-only, and Baidu does not officially offer an offline version. A search for alternatives turned up VOSK and CMU Sphinx, both of which are open source. The official CMU Sphinx website does not provide a Chinese model, so VOSK was chosen.

1. What is VOSK?

Vosk is a speech recognition toolkit. The best things about Vosk are:

1. Twenty+ languages supported – Chinese, English, Indian English, German, French, Spanish, Portuguese, Russian, Turkish, Vietnamese, Italian, Dutch, Catalan, Arabic, Greek, Persian, Filipino, Ukrainian, Kazakh, Swedish, Japanese, Esperanto

2. Works offline, even on lightweight devices – Raspberry Pi, Android, iOS

3. Installs with a simple pip3 install vosk

4. Portable models are only 50 MB per language, but larger server models are available

5. Provides a streaming API for the best user experience (unlike popular speech recognition Python packages)

6. There are also wrappers for different programming languages – Java, C#, JavaScript, etc.

7. The vocabulary can be quickly reconfigured for optimal accuracy

8. Supports speaker recognition

2. Usage steps

1. Environment preparation

Because the underlying library is native code written in C/C++, on Windows you need to download and install the Microsoft Visual C++ Redistributable (vcredist).

2. Maven dependencies

The code is as follows (example):

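    <dependency>
        <groupId>net.java.dev.jna</groupId>
        <artifactId>jna</artifactId>
        <version>5.7.0</version>
    </dependency>
    <dependency>
        <groupId>com.alphacephei</groupId>
        <artifactId>vosk</artifactId>
        <version>0.3.32</version>
    </dependency>

If the import fails, you can download the jar and add it to the build path manually.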

3. Language Model

Download the language model you want to use from the official website: https://alphacephei.com/vosk/models. For Chinese, the recommended model is vosk-model-small-cn-0.3.
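Alongside the model, it can help to know the format of the audio you plan to transcribe, because the demo in the next step reads a WAV file and passes a sample rate to the recognizer. The sketch below uses only the JDK's javax.sound.sampled package (the same one the demo uses to open the file) to print a WAV file's encoding, sample rate, channel count, and bit depth. The class name WavFormatCheck and the method describe are hypothetical names chosen for illustration, and the idea that a mismatch between the file and the recognizer's sample rate hurts results is an assumption rather than something the original article states.

```java
import javax.sound.sampled.AudioFormat;
import javax.sound.sampled.AudioInputStream;
import javax.sound.sampled.AudioSystem;
import javax.sound.sampled.UnsupportedAudioFileException;
import java.io.File;
import java.io.IOException;
import java.util.Locale;

public class WavFormatCheck {

    /** Returns a one-line description of the audio file's format. */
    static String describe(File wav) throws IOException, UnsupportedAudioFileException {
        try (AudioInputStream in = AudioSystem.getAudioInputStream(wav)) {
            AudioFormat f = in.getFormat();
            return String.format(Locale.ROOT, "%s, %.0f Hz, %d channel(s), %d bits",
                    f.getEncoding(), f.getSampleRate(), f.getChannels(), f.getSampleSizeInBits());
        }
    }

    public static void main(String[] args) throws Exception {
        // "cn.wav" is the file name used in the demo; pass another path as the first argument.
        File wav = new File(args.length > 0 ? args[0] : "cn.wav");
        if (!wav.isFile()) {
            System.out.println("No such file: " + wav);
            return;
        }
        System.out.println(describe(wav));
    }
}
```

If the reported sample rate differs from the value passed to the Recognizer constructor, one option is to pass the file's actual rate instead of a hard-coded number.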

4. Run the code

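Add the official demo to the project. The part that needs to be changed is DecoderDemo, which is the entry point:

    import java.io.BufferedInputStream;
    import java.io.FileInputStream;
    import java.io.IOException;
    import java.io.InputStream;
    import javax.sound.sampled.AudioSystem;
    import javax.sound.sampled.UnsupportedAudioFileException;
    import org.vosk.LibVosk;
    import org.vosk.LogLevel;
    import org.vosk.Model;
    import org.vosk.Recognizer;

    public class DecoderDemo {

        public static void main(String[] argv) throws IOException, UnsupportedAudioFileException {
            LibVosk.setLogLevel(LogLevel.DEBUG);

            try (Model model = new Model("model"); // path to the model directory
                 InputStream ais = AudioSystem.getAudioInputStream(
                         new BufferedInputStream(new FileInputStream("cn.wav"))); // audio file to transcribe; only WAV is supported
                 Recognizer recognizer = new Recognizer(model, 12000)) { // sample rate in Hz

                int nbytes;
                byte[] b = new byte[4096];
                // Feed the audio to the recognizer chunk by chunk
                while ((nbytes = ais.read(b)) >= 0) {
                    if (recognizer.acceptWaveForm(b, nbytes)) {
                        System.out.println(recognizer.getResult());
                    } else {
                        System.out.println(recognizer.getPartialResult());
                    }
                }

                System.out.println(recognizer.getFinalResult());
            }
        }
    }

Summary

The biggest disadvantage of this open-source toolkit is that it is a bit slow. If you know of other suitable open-source options, please recommend them.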

Easy Way to Learn Speech Recognition in Java With a Speech-To-Text API

Here we show how to use a speech-to-text API with two Java examples.

We will be using the Rev AI API (free for your first 5 hours), which offers two different speech-to-text APIs: an asynchronous API and a streaming API.

Asynchronous Rev AI API Java Code Example

We will use the Rev AI Java SDK. The sample is a short audio clip on the exciting topic of HR recruiting.

First, sign up for Rev AI for free and get an access token.

Create a Java project with whatever editor you normally use. Then add this dependency to the Maven pom.xml manifest:

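    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>org.example</groupId>
        <artifactId>Rev2</artifactId>
        <version>1.0-SNAPSHOT</version>

        <dependencies>
            <dependency>
                <groupId>ai.rev.speechtotext</groupId>
                <artifactId>revai-java-sdk-speechtotext</artifactId>
                <version>1.3.0</version>
            </dependency>
        </dependencies>

        <properties>
            <maven.compiler.source>11</maven.compiler.source>
            <maven.compiler.target>11</maven.compiler.target>
        </properties>
    </project>

We explain the code sample below and show its output.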

Submit the job from a URL:

    submittedJob = apiClient.submitJobUrl(mediaUrl, revAiJobOptions);

Most of the Rev AI options are self-explanatory. Instead of the polling method used in this example, you can use the callback URL to kick off the download of the transcription in another program that is on standby, listening over HTTP.

    RevAiJobOptions revAiJobOptions = new RevAiJobOptions();
    revAiJobOptions.setCustomVocabularies(Arrays.asList(customVocabulary));
    revAiJobOptions.setMetadata("My first submission");
    revAiJobOptions.setCallbackUrl("https://example.com");
    revAiJobOptions.setSkipPunctuation(false);
    revAiJobOptions.setSkipDiarization(false);
    revAiJobOptions.setFilterProfanity(true);
    revAiJobOptions.setRemoveDisfluencies(true);
    revAiJobOptions.setSpeakerChannelsCount(null);
    revAiJobOptions.setDeleteAfterSeconds(2592000); // 30 days in seconds
    revAiJobOptions.setLanguage("en");

Poll the job status in a loop, and download the transcription once the job is done.

    RevAiJobStatus retrievedJobStatus = retrievedJob.getJobStatus();

The SDK returns captions as well as text.

    srtCaptions = apiClient.getCaptions(jobId, RevAiCaptionType.SRT);
    vttCaptions = apiClient.getCaptions(jobId, RevAiCaptionType.VTT);

Here is the complete code:

    package ai.rev.speechtotext;

    import ai.rev.speechtotext.models.asynchronous.RevAiCaptionType;
    import ai.rev.speechtotext.models.asynchronous.RevAiJob;
    import ai.rev.speechtotext.models.asynchronous.RevAiJobOptions;
    import ai.rev.speechtotext.models.asynchronous.RevAiJobStatus;
    import ai.rev.speechtotext.models.asynchronous.RevAiTranscript;
    import ai.rev.speechtotext.models.vocabulary.CustomVocabulary;

    import java.io.IOException;
    import java.io.InputStream;
    import java.util.Arrays;

    public class AsyncTranscribeMediaUrl {

        public static void main(String[] args) {
            // Assign your access token to a String
            String accessToken = "your_access_token";

            // Initialize the ApiClient with your access token
            ApiClient apiClient = new ApiClient(accessToken);

            // Create a custom vocabulary for your submission
            CustomVocabulary customVocabulary =
                    new CustomVocabulary(Arrays.asList("Robert Berwick", "Noam Chomsky", "Evelina Fedorenko"));

            // Initialize the RevAiJobOptions object and assign the options
            RevAiJobOptions revAiJobOptions = new RevAiJobOptions();
            revAiJobOptions.setCustomVocabularies(Arrays.asList(customVocabulary));
            revAiJobOptions.setMetadata("My first submission");
            revAiJobOptions.setCallbackUrl("https://example.com");
            revAiJobOptions.setSkipPunctuation(false);
            revAiJobOptions.setSkipDiarization(false);
            revAiJobOptions.setFilterProfanity(true);
            revAiJobOptions.setRemoveDisfluencies(true);
            revAiJobOptions.setSpeakerChannelsCount(null);
            revAiJobOptions.setDeleteAfterSeconds(2592000); // 30 days in seconds
            revAiJobOptions.setLanguage("en");

            RevAiJob submittedJob;

            String mediaUrl = "https://www.rev.ai/FTC_Sample_1.mp3";

            try {
                // Submit the media URL and transcription options
                submittedJob = apiClient.submitJobUrl(mediaUrl, revAiJobOptions);
            } catch (IOException e) {
                throw new RuntimeException("Failed to submit url [" + mediaUrl + "] " + e.getMessage());
            }

            String jobId = submittedJob.getJobId();
            System.out.println("Job Id: " + jobId);
            System.out.println("Job Status: " + submittedJob.getJobStatus());
            System.out.println("Created On: " + submittedJob.getCreatedOn());

            // Waits 5 seconds between each status check to see if job is complete
            boolean isJobComplete = false;
            while (!isJobComplete) {
                RevAiJob retrievedJob;
                try {
                    retrievedJob = apiClient.getJobDetails(jobId);
                } catch (IOException e) {
                    throw new RuntimeException("Failed to retrieve job [" + jobId + "] " + e.getMessage());
                }

                RevAiJobStatus retrievedJobStatus = retrievedJob.getJobStatus();
                if (retrievedJobStatus == RevAiJobStatus.TRANSCRIBED
                        || retrievedJobStatus == RevAiJobStatus.FAILED) {
                    isJobComplete = true;
                } else {
                    try {
                        Thread.sleep(5000);
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    }
                }
            }

            // Get the transcript and caption outputs
            RevAiTranscript objectTranscript;
            String textTranscript;
            InputStream srtCaptions;
            InputStream vttCaptions;

            try {
                objectTranscript = apiClient.getTranscriptObject(jobId);
                textTranscript = apiClient.getTranscriptText(jobId);
                srtCaptions = apiClient.getCaptions(jobId, RevAiCaptionType.SRT);
                vttCaptions = apiClient.getCaptions(jobId, RevAiCaptionType.VTT);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

Running it prints:

    Job Id: hzNOmTjrPfzB
    Job Status:
    Created On: 2021-03-16T08:12:14.202Z

    Process finished with exit code 0

You can get the transcript with Java.

    textTranscript = apiClient.getTranscriptText(jobId);

Or fetch it later with curl, using the job ID printed to stdout above.

    curl -X GET "https://api.rev.ai/speechtotext/v1/jobs/<job_id>/transcript" \
         -H "Authorization: Bearer $REV_ACCESS_TOKEN" \
         -H "Accept: application/vnd.rev.transcript.v1.0+json"

Replace <job_id> with your job ID. This returns the transcription in JSON format.
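The curl request can also be issued from plain Java without the SDK. The following is a minimal sketch using the java.net.http.HttpClient that ships with Java 11, reusing the URL and the two headers from the curl command. The class name FetchTranscript and the helper buildRequest are hypothetical names for illustration, and the job ID and access token are supplied by you.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class FetchTranscript {

    /** Builds the same GET request as the curl command, for the given job ID and token. */
    static HttpRequest buildRequest(String jobId, String accessToken) {
        return HttpRequest.newBuilder()
                .uri(URI.create("https://api.rev.ai/speechtotext/v1/jobs/" + jobId + "/transcript"))
                .header("Authorization", "Bearer " + accessToken)
                .header("Accept", "application/vnd.rev.transcript.v1.0+json")
                .GET()
                .build();
    }

    public static void main(String[] args) throws Exception {
        String token = System.getenv("REV_ACCESS_TOKEN");
        if (args.length < 1 || token == null) {
            System.out.println("Usage: REV_ACCESS_TOKEN=... java FetchTranscript <jobId>");
            return;
        }
        // Send the request and print the HTTP status followed by the response body
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(buildRequest(args[0], token), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode());
        System.out.println(response.body());
    }
}
```

Keeping the request construction in its own method makes it easy to check the URL and headers without touching the network.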

Streaming Rev AI API Java Code Example

A stream is a WebSocket connection from your video or audio server to the Rev AI audio-to-text engine.

We can emulate this connection by streaming a .raw file from the local hard drive to Rev AI.

Download the audio, then convert it from WAV to .raw format with the following ffmpeg command:

    ffmpeg -i Harvard_list_01.wav -f f32le -acodec pcm_f32le Harvard_list_01.raw

As it runs, ffmpeg prints key information about the audio file:

    Input #0, wav, from 'Harvard_list_01.wav':
      Metadata:
        encoder         : Adobe Audition CC 2017.1 (Windows)
        date            : 2018-12-20
        creation_time   : 15:58:57
        time_reference  : 0
      Duration: 00:01:07.67, bitrate: 1536 kb/s
        Stream #0:0: Audio: pcm_f32le ([3][0][0][0] / 0x0003), 48000 Hz, 1 channels, flt, 1536 kb/s
    Output #0, f32le, to 'Harvard_list_01.raw':
      Metadata:
        time_reference  : 0
        date            : 2018-12-20
        encoder         : Lavf56.40.101
        Stream #0:0: Audio: pcm_f32le, 48000 Hz, mono, flt, 1536 kb/s
        Metadata:
          encoder         : Lavc56.60.100 pcm_f32le
    Stream mapping:

To explain the code: first we set up a WebSocket connection and start streaming the file:

    // Initialize your WebSocket listener
    WebSocketListener webSocketListener = new WebSocketListener();

    // Begin the streaming session
    streamingClient.connect(webSocketListener, streamContentType, sessionConfig);

The important items to set here are the sampling rate (not the bit rate) and the format. We take these values from the ffmpeg output: Audio: pcm_f32le, 48000 Hz.

    StreamContentType streamContentType = new StreamContentType();
    streamContentType.setContentType("audio/x-raw"); // audio content type
    streamContentType.setLayout("interleaved"); // layout
    streamContentType.setFormat("F32LE"); // format
    streamContentType.setRate(48000); // sample rate
    streamContentType.setChannels(1); // channels

After the client connects, the onConnected event sends a message. We can get the job ID from there. This lets us download the transcription later if we don't want to get it in real time.
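The distinction between sample rate and bit rate can be checked with a little arithmetic. The sketch below, which is illustrative only, recomputes the bit rate that ffmpeg reported from the sample rate, sample size, and channel count, and also works out how much audio an 8000-byte message holds (8000 bytes is the chunk size used in the complete code further down). The class and method names are hypothetical.

```java
import java.util.Locale;

public class RawAudioMath {

    /** Bit rate in bits per second for uncompressed PCM audio. */
    static long bitRate(int sampleRateHz, int bitsPerSample, int channels) {
        return (long) sampleRateHz * bitsPerSample * channels;
    }

    /** Duration in milliseconds of the audio contained in a chunk of raw PCM bytes. */
    static double chunkMillis(int chunkBytes, int sampleRateHz, int bitsPerSample, int channels) {
        int bytesPerFrame = (bitsPerSample / 8) * channels; // one sample per channel
        double frames = (double) chunkBytes / bytesPerFrame;
        return 1000.0 * frames / sampleRateHz;
    }

    public static void main(String[] args) {
        // Values from the ffmpeg output: pcm_f32le (32-bit float), 48000 Hz, mono
        int sampleRateHz = 48000;
        int bitsPerSample = 32;
        int channels = 1;

        long bps = bitRate(sampleRateHz, bitsPerSample, channels);
        System.out.println(bps / 1000 + " kb/s"); // prints "1536 kb/s"

        double ms = chunkMillis(8000, sampleRateHz, bitsPerSample, channels);
        System.out.println(String.format(Locale.ROOT, "%.1f ms per 8000-byte chunk", ms)); // prints "41.7 ms per 8000-byte chunk"
    }
}
```

So the 1536 kb/s figure follows from 48000 samples per second times 32 bits per sample times one channel, and the value passed to setRate is the 48000, not the 1536.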

To get the transcription in real time, listen for the onHypothesis event:

    @Override
    public void onHypothesis(Hypothesis hypothesis) {
        if (hypothesis.getType() == MessageType.FINAL) {
            String textValue = "";
            for (Element element : hypothesis.getElements()) {
                textValue = textValue + element.getValue();
            }
            System.out.println(" text transcribed " + textValue);
        }
    }

Here is the complete code:

    package com.example.stream;

    import ai.rev.speechtotext.models.streaming.ConnectedMessage;
    import ai.rev.speechtotext.models.streaming.Hypothesis;
    import ai.rev.speechtotext.models.streaming.SessionConfig;
    import ai.rev.speechtotext.models.streaming.StreamContentType;
    import ai.rev.speechtotext.StreamingClient;
    import ai.rev.speechtotext.RevAiWebSocketListener;
    import ai.rev.speechtotext.models.streaming.MessageType;
    import ai.rev.speechtotext.models.asynchronous.Element;
    import okhttp3.Response;
    import okio.ByteString;

    import java.io.BufferedInputStream;
    import java.io.DataInputStream;
    import java.io.File;
    import java.io.FileInputStream;
    import java.io.IOException;
    import java.nio.ByteBuffer;
    import java.util.Arrays;

    public class StreamingFromLocalFile {

        public static void main(String[] args) throws InterruptedException {
            // Assign your access token to a String
            String accessToken = "xxxxx";

            // Configure the streaming content type
            StreamContentType streamContentType = new StreamContentType();
            streamContentType.setContentType("audio/x-raw"); // audio content type
            streamContentType.setLayout("interleaved"); // layout
            streamContentType.setFormat("F32LE"); // format
            streamContentType.setRate(48000); // sample rate
            streamContentType.setChannels(1); // channels

            // Setup the SessionConfig with any optional parameters
            SessionConfig sessionConfig = new SessionConfig();
            sessionConfig.setMetaData("Streaming from the Java SDK");
            sessionConfig.setFilterProfanity(true);
            sessionConfig.setRemoveDisfluencies(true);
            sessionConfig.setDeleteAfterSeconds(2592000); // 30 days in seconds

            // Initialize your client with your access token
            StreamingClient streamingClient = new StreamingClient(accessToken);

            // Initialize your WebSocket listener
            WebSocketListener webSocketListener = new WebSocketListener();

            // Begin the streaming session
            streamingClient.connect(webSocketListener, streamContentType, sessionConfig);

            // Read file from disk
            File file = new File("/Users/walkerrowe/Downloads/Harvard_list_01.raw");

            // Convert file into byte array
            byte[] fileByteArray = new byte[(int) file.length()];
            try (final FileInputStream fileInputStream = new FileInputStream(file)) {
                BufferedInputStream bufferedInputStream = new BufferedInputStream(fileInputStream);
                try (final DataInputStream dataInputStream = new DataInputStream(bufferedInputStream)) {
                    dataInputStream.readFully(fileByteArray, 0, fileByteArray.length);
                } catch (IOException e) {
                    throw new RuntimeException(e.getMessage());
                }
            } catch (IOException e) {
                throw new RuntimeException(e.getMessage());
            }

            // Set the number of bytes to send in each message
            int chunk = 8000;

            // Stream the file in the configured chunk size
            for (int start = 0; start < fileByteArray.length; start += chunk) {
                streamingClient.sendAudioData(
                        ByteString.of(
                                ByteBuffer.wrap(
                                        Arrays.copyOfRange(
                                                fileByteArray, start, Math.min(fileByteArray.length, start + chunk)))));
            }

            // Wait to make sure all responses are received
            Thread.sleep(5000);

            // Close the WebSocket
            streamingClient.close();
        }

        // Your WebSocket listener for all streaming responses
        private static class WebSocketListener implements RevAiWebSocketListener {

            @Override
            public void onConnected(ConnectedMessage message) {
                System.out.println(message);
            }

            @Override
            public void onHypothesis(Hypothesis hypothesis) {
                if (hypothesis.getType() == MessageType.FINAL) {
                    String textValue = "";
                    for (Element element : hypothesis.getElements()) {
                        textValue = textValue + element.getValue();
                    }
                    System.out.println(" text transcribed " + textValue);
                }
                // Uncomment this for log messages
                // System.out.println(hypothesis.toString());
            }

            @Override
            public void onError(Throwable t, Response response) {
                System.out.println(response);
            }

            @Override
            public void onClose(int code, String reason) {
                System.out.println(reason);
            }

            @Override
            public void onOpen(Response response) {
                System.out.println(response.toString());
            }
        }
    }

Here is what the output looks like:

     text transcribed Okay.
     text transcribed List number one.
     text transcribed The Birch canoe slid on the smooth planks.
     text transcribed Do the sheet to the dark blue background.
     text transcribed It's easy to tell the depth of a well.
     text transcribed These days, a chicken leg is a rare dish.
     text transcribed Rice is often served in round bowls.
     text transcribed The juice of lemons makes fine. Punch.
     text transcribed The box was thrown beside the parked truck.
     text transcribed The Hawks were fed chopped corn and garbage.
     text transcribed Four hours of steady work face. Does.
     text transcribed Large size and stockings is hard to sell.
    End of input. Closing

What is the Best Speech Recognition API for Java?

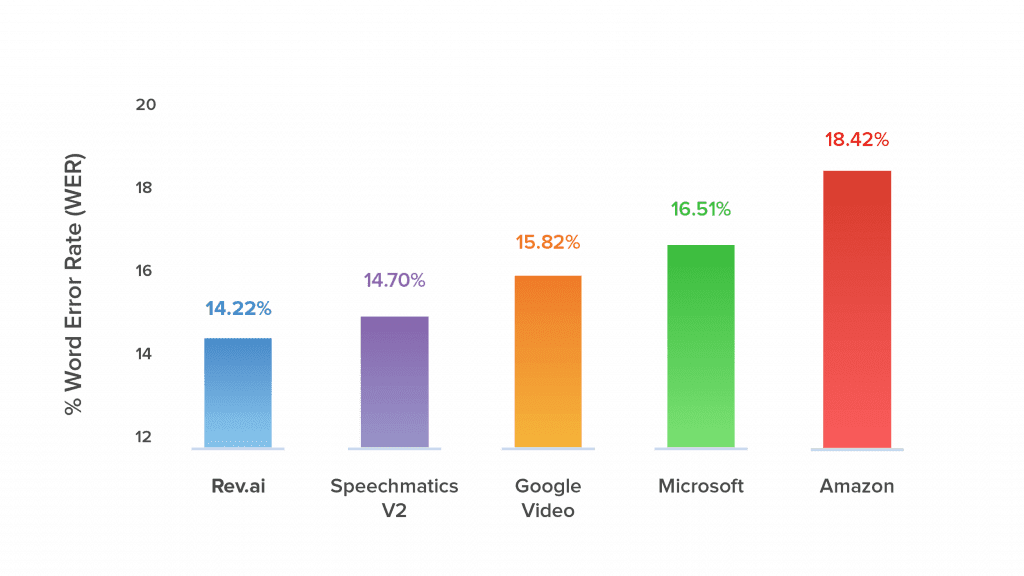

Accuracy is what you want in a speech-to-text API, and Rev AI is a one-of-a-kind speech-to-text API in that regard.

You might ask, “So what? Siri and Alexa already do speech-to-text, and Google has a speech cloud API.”

That’s true. But there’s one game-changing difference:

The data that powers Rev AI is manually collected and carefully edited. Rev pays 50,000 freelancers to transcribe audio & caption videos for its 99% accurate transcription & captioning services. Rev AI is trained with this human-sourced data, and this produces transcripts that are far more accurate than those compiled simply by collecting audio, as Siri and Alexa do.

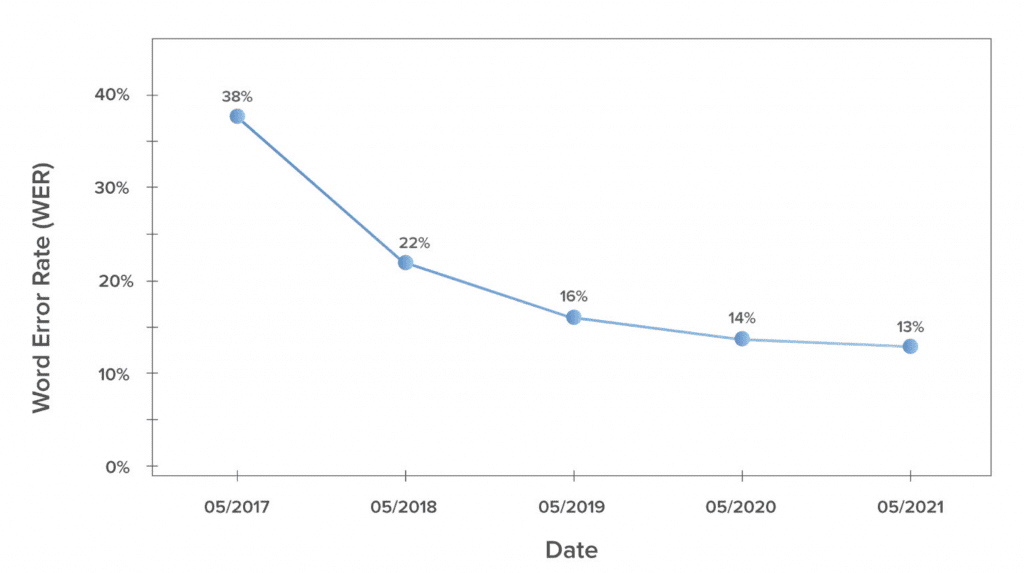

Rev AI’s accuracy is also snowballing, in a sense. Rev’s speech recognition system and API keep improving in accuracy as the dataset grows and its engineers refine the product.

Labelled Data and Machine Learning

Why is human transcription important?

If you are familiar with machine learning then you know that converting audio to text is a classification problem.

To train the computer to transcribe audio, ML programmers feed feature-label data into their model. This data is called a training set.

Features (sound) are the input, and labels (the corresponding letters) are the output that the classification algorithm computes.
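To make the feature-label idea concrete, here is a toy sketch of a classifier in Java. It is not how Rev AI or any real speech recognizer works: the two-number feature vectors and the letters attached to them are invented for illustration, and the nearest-neighbour rule stands in for a far more complex model. It only shows the shape of the problem, where human-labelled examples go in and a predicted label comes out.

```java
import java.util.List;

public class ToyClassifier {

    /** One training example: a feature vector and its human-supplied label. */
    static final class Example {
        final double[] features;
        final String label;

        Example(double[] features, String label) {
            this.features = features;
            this.label = label;
        }
    }

    /** Returns the label of the training example closest to the input (1-nearest-neighbour). */
    static String classify(List<Example> trainingSet, double[] input) {
        String best = null;
        double bestDistance = Double.MAX_VALUE;
        for (Example e : trainingSet) {
            // Squared Euclidean distance between the input and this example
            double distance = 0;
            for (int i = 0; i < input.length; i++) {
                double diff = e.features[i] - input[i];
                distance += diff * diff;
            }
            if (distance < bestDistance) {
                bestDistance = distance;
                best = e.label;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Made-up features standing in for sounds, each labelled with a letter by a human
        List<Example> trainingSet = List.of(
                new Example(new double[]{0.9, 0.1}, "a"),
                new Example(new double[]{0.2, 0.8}, "s"),
                new Example(new double[]{0.5, 0.5}, "t"));

        System.out.println(classify(trainingSet, new double[]{0.85, 0.2})); // prints "a"
    }
}
```

The quality of the prediction depends entirely on the labels in the training set, which is why the source of those labels matters.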

Alexa and Siri vacuum up this data all day long. So you would think they would have the largest and therefore most accurate training data.

But that’s only half of the equation. It takes many hours of manual work to type in the labels that correspond to the audio. In other words, a human must listen to the audio and type the corresponding letter and word.

This is what Rev AI has done.

It’s a business model that has taken off, because it fills a very specific need.

For example, look at closed captioning on YouTube. YouTube can automatically add captions to its videos, but they are not always accurate, and you will notice that some of the captions are nonsense. It’s just like Google Translate: it works most of the time, but not all of the time.

The giant tech companies use statistical analysis, like the frequency distribution of words, to help their models.

But they are consistently outperformed by audio-to-text models trained on manually labelled data.