Linear Regression using Stochastic Gradient Descent in Python

In today’s tutorial, we will learn the basic concept behind another iterative optimization algorithm, stochastic gradient descent, and how to implement it from scratch. You will also see some of the algorithm’s benefits and drawbacks.

If you aren’t familiar with the gradient descent algorithm, please see the most recent tutorial before you continue reading.

The Concept behind Stochastic Gradient Descent

One of the significant downsides of the gradient descent algorithm shows up when you are working with a large number of samples. You might be wondering why this poses a problem.

The reason is that we first need to compute the sum of squared residuals over every sample, and the work per sample grows with the number of features. We then compute the derivative of this function with respect to the weight of each feature in our dataset. This will undoubtedly require a lot of computing power if your training set is large and you want better results.
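To make that cost concrete, here is a minimal sketch of one full-batch gradient computation for a single-feature model y ≈ w·x + b. The names `batch_gradient`, `w`, and `b` are illustrative assumptions, and the sketch averages the squared residuals rather than summing them. The only point is that every call has to visit every sample.

```python
def batch_gradient(xs, ys, w, b):
    """Gradient of the mean squared residual for y ~ w * x + b.

    Illustrative helper (an assumption, not the tutorial's code): every
    call loops over the whole dataset, so its cost grows linearly with
    the number of samples.
    """
    n = len(xs)
    grad_w = 0.0
    grad_b = 0.0
    for x, y in zip(xs, ys):
        residual = (w * x + b) - y      # prediction error for this sample
        grad_w += 2.0 * residual * x    # d/dw of residual**2
        grad_b += 2.0 * residual        # d/db of residual**2
    return grad_w / n, grad_b / n


# Tiny made-up dataset that follows y = 2x exactly.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
print(batch_gradient(xs, ys, w=0.0, b=0.0))
```

With more features, the inner work per sample would grow as well, which is why the full-batch version becomes expensive on large training sets.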

Because gradient descent is an iterative algorithm, this computation has to be repeated for as many iterations as specified. That’s dreadful, isn’t it? Instead of running gradient descent over every example at each step, an easy solution is to take a different approach: compute the loss/cost over a small random sample of the training data, calculate the derivative on that sample, and assume that this derivative points in the best direction for the next gradient descent step.

Along with this, rather than selecting a random data point at each step, we pick a random ordering of the data and then walk through it sequentially. This advantageous variant of gradient descent is called stochastic gradient descent.
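The random-ordering idea can be sketched in a few lines. This is a hedged illustration for a single-feature model y ≈ w·x + b, not necessarily the implementation used later in this tutorial; `sgd_epoch`, the learning rate of 0.1, and the toy data are all assumptions made for the example.

```python
import random


def sgd_epoch(xs, ys, w, b, learning_rate, rng):
    """One pass over the data in a freshly shuffled order (illustrative)."""
    order = list(range(len(xs)))
    rng.shuffle(order)                  # random ordering of the samples
    for i in order:                     # then walk through it in sequence
        residual = (w * xs[i] + b) - ys[i]
        # Update using this single sample's squared residual only.
        w -= learning_rate * 2.0 * residual * xs[i]
        b -= learning_rate * 2.0 * residual
    return w, b


# Made-up, noise-free data on the line y = 3x + 1.
xs = [i / 10 for i in range(11)]
ys = [3.0 * x + 1.0 for x in xs]

rng = random.Random(0)                  # seeded so runs are repeatable
w, b = 0.0, 0.0
for _ in range(500):
    w, b = sgd_epoch(xs, ys, w, b, learning_rate=0.1, rng=rng)
print(w, b)
```

Each update here uses one sample, so a single step is far cheaper than a full pass over the dataset.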

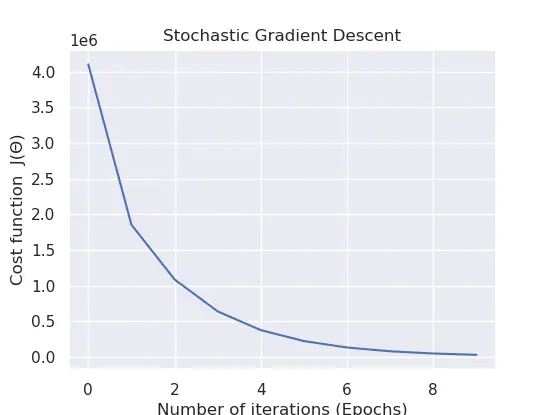

In some cases, an individual step may not go in the optimal direction, which can temporarily increase the loss/cost. Nevertheless, this can be taken care of by running the algorithm for many iterations and by taking small steps as we iterate.
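As a rough illustration of "many iterations, small steps", the sketch below records the loss after each pass over noisy, made-up data. The function names, the learning rate of 0.05, the 50 epochs, and the noise level are assumptions chosen for the example, not values taken from this tutorial.

```python
import random


def mean_squared_error(xs, ys, w, b):
    """Average squared residual of y ~ w * x + b over the whole dataset."""
    return sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_sgd(xs, ys, learning_rate, epochs, seed=0):
    """Repeated shuffled passes with a small step size (illustrative)."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    history = [mean_squared_error(xs, ys, w, b)]   # loss before training
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            residual = (w * xs[i] + b) - ys[i]
            w -= learning_rate * 2.0 * residual * xs[i]
            b -= learning_rate * 2.0 * residual
        history.append(mean_squared_error(xs, ys, w, b))  # loss per epoch
    return w, b, history


# Made-up noisy data scattered around the line y = 3x + 1.
data_rng = random.Random(42)
xs = [data_rng.random() for _ in range(50)]
ys = [3.0 * x + 1.0 + data_rng.gauss(0.0, 0.1) for x in xs]

w, b, history = fit_sgd(xs, ys, learning_rate=0.05, epochs=50)
print(history[0], history[-1])
```

Individual updates can move the parameters the wrong way, but with a small learning rate the loss recorded per epoch should end up far below its starting value.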

Applying Stochastic Gradient Descent with Python

Now that we understand the essential concepts behind stochastic gradient descent, let’s implement it in Python on a randomized data sample.

Open a brand-new file, name it linear_regression_sgd.py, and insert the following code: