- How to Send Multiple Concurrent Requests in Python

- send multiple HTTP GET requests

- concurrent — multiple HTTP requests at once

- asyncio & aiohttp — send multiple requests

- send multiple requests — proxy + file output

- summary

- Multi-threading API Requests in Python

- Classic Single Threaded Code

- Multi Threaded Code

How to Send Multiple Concurrent Requests in Python

To send multiple parallel HTTP requests in Python, we can use the requests library.

send multiple HTTP GET requests

Let’s start by example of sending multiple HTTP GET requests in Python:

import requests urls = ['https://example.com/', 'https://httpbin.org/', 'https://example.com/test_page'] for url in urls: response = requests.get(url) print(response.status_code) We can find the status of each request below:

concurrent — multiple HTTP requests at once

To send multiple HTTP requests in parallel we can use Python libraries like:

The easiest to use is the concurrent library.

Example of sending multiple GET requests with a concurrent library. We create a pool of worker threads or processes. In this example we have 3 concurrent requests — max_workers=3

import requests from concurrent.futures import ThreadPoolExecutor urls = ['https://example.com/', 'https://httpbin.org/', 'https://example.com/test_page'] def get_url(url): return requests.get(url) with ThreadPoolExecutor(max_workers=3) as pool: print(list(pool.map(get_url,urls))) The result of the execution:

asyncio & aiohttp — send multiple requests

If we want to send requests concurrently, you can use a library such as:

Here’s an example of how to use concurrent.futures to send multiple HTTP requests concurrently:

import aiohttp import asyncio urls = ['https://httpbin.org/ip', 'https://httpbin.org/get', 'https://httpbin.org/cookies'] * 10 async def get_url_data(url, session): r = await session.request('GET', url=f'') data = await r.json() return data async def main(urls): async with aiohttp.ClientSession() as session: tasks = [] for url in urls: tasks.append(get_url_data(url=url, session=session)) results = await asyncio.gather(*tasks, return_exceptions=True) return results data = asyncio.run(main(urls)) for item in data: print(item) Sending the multiple requests will result into simultaneous output:

, 'headers': , 'origin': '123.123.123.123', 'url': 'https://httpbin.org/get'> > - create a list of URLs that we want to send requests to

- then create a two asynchronous method

- we extract JSON data from the result

- finally we print the results

send multiple requests — proxy + file output

Finally let’s see an example of sending multiple requests with:

- multiprocess library

- using proxy requests

- parsing JSON output

- saving the results into file:

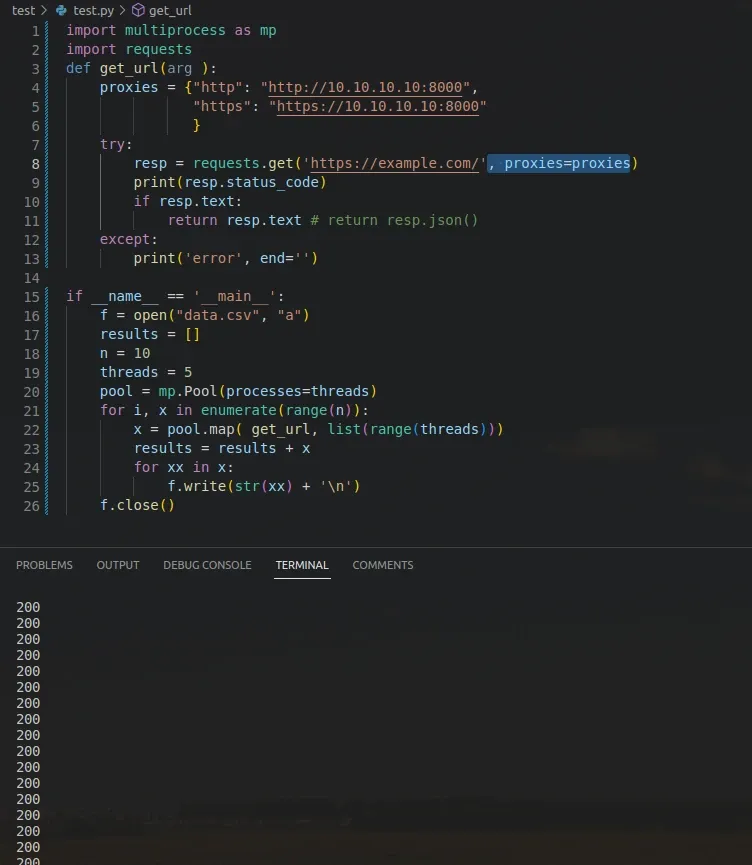

The tests will run 10 times with 5 parallel processes. You need to use valid proxy and URL address in order to get correct results:

import multi process as mp import requests def get_url(arg ): proxies = try: resp = requests.get('https://example.com/', proxies=proxies) print(resp.status_code) if resp.text: return resp.text # return resp.json() except: print('error', end='') if __name__ == '__main__': f = open("data.csv", "a") results = [] n = 10 threads = 5 pool = mp.Pool(processes=threads) for i, x in enumerate(range(n)): x = pool.map( get_url, list(range(threads))) results = results + x for xx in x: f.write(str(xx) + '\n') f.close() Don’t use these examples in Jupyter Notebook or JupyterLab. Parallel execution may not work as expected!

The result is visible on the image below:

summary

In this article we saw how to send multiple HTTP requests in Python with libraries like:

We saw multiple examples with JSON data, proxy usage and file writing. We covered sending requests with libraries — requests and aiohttp

For more parallel examples in Python you can read:

By using SoftHints — Python, Linux, Pandas , you agree to our Cookie Policy.

Multi-threading API Requests in Python

When making hundred or thousands of API calls things can quickly get really slow in a single threaded application.

No matter how well your own code runs you’ll be limited by network latency and response time of the remote server. Making 10 calls with a 1 second response is maybe OK but now try 1000. Not so fun.

For a recent project I needed to make almost 50.000 API calls and the script was taking hours to complete. Now looking into multi-threading applications was no longer an option, it was required.

Classic Single Threaded Code

This is the boilerplate way to make an API request and save the contents as a file. The code simply loops through a list of URLs to call and downloads each one as a JSON file giving it a unique name.

import requests import uuid url_list = ['url1', 'url2'] for url in url_list: html = requests.get(url, stream=True) file_name = uuid.uuid1() open(f'.json', 'wb').write(html.content)

Multi Threaded Code

For comparison here is the same code running multi-threaded.

import requests import uuid from concurrent.futures import ThreadPoolExecutor, as_completed url_list = ['url1', 'url2'] def download_file(url, file_name): try: html = requests.get(url, stream=True) open(f'.json', 'wb').write(html.content) return html.status_code except requests.exceptions.RequestException as e: return e def runner(): threads= [] with ThreadPoolExecutor(max_workers=20) as executor: for url in url_list: file_name = uuid.uuid1() threads.append(executor.submit(download_file, url, file_name)) for task in as_completed(threads): print(task.result()) runner()

Breaking it down you first need to import ThreadPoolExecutor and as_completed from concurrent.futures. This is a built-in python library so no need to install anything here.

Next you must encapsulate you downloading code into its own function. The function download_file does this in the above example, this is called with the URL to download and a file name to use when saving the downloaded contents.

The main part comes in the runner() function. First create an empty list of threads.

Then create your pool of threads with your chosen number of workers (threads). This number is up to you but for most APIs I would not go crazy here otherwise you risk being blocked by the server. For me 10 to 20 works well.

with ThreadPoolExecutor(max_workers=20) as executor:

Next loop through your URL list and append a new thread as shown below. Here it’s clear why you need to encapsulate your download code into a function since the first argument is the name of the function you wish to run in a new thread. The arguments after that are the arguments being passed to the download function.

You can think of this as making multiple copies or forks of the downloading function and then running each one in parallel in different threads.

threads.append(executor.submit(download_file, url, file_name)

Finally we print out the return value from each thread (in this case we returned the status code fro the API call)

for task in as_completed(processes): print(task.result())

That’s it. Easy to implement and gives a huge speedup. In my case I ended up with this performance.

Time taken: 1357 seconds (22 minutes)

49980 files

1.03 Gb

This works out at almost 37 files a second or 2209 files per minute. This is at least a 10x improvement in performance.