- Run a Python script as a subprocess with the multiprocessing module

- The worker script

- The main script

- Parallelization in practice

- Further reading

- Launch Script From Another Python Script

- Using subprocess.run

- Launch Script

- Execute Shell Command

- Using subprocess.Popen

- Process Interaction

- Changing stdout and stderr

- Run another Programming Language

Run a Python script as a subprocess with the multiprocessing module

Suppose you have a Python script worker.py performing some long computation. Also suppose you need to perform these computations several times for different input data. If all the computations are independent from each other, one way to speed them up is to use Python’s multiprocessing module.

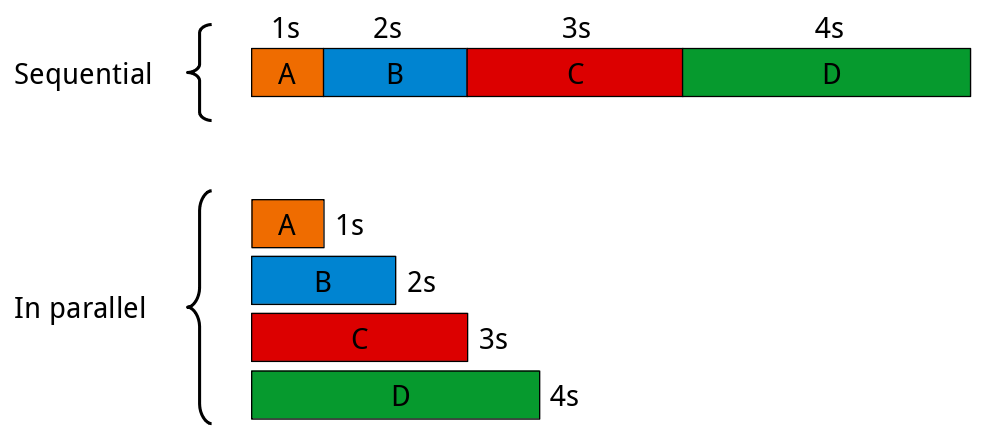

This comes down to the difference between sequential and parallel execution. Suppose you have the tasks A, B, C and D, requiring 1, 2, 3 and 4 seconds, respectively, to complete. When run sequentially, meaning one after the other, they need 10 seconds in total to complete. When run in parallel (if you have 4 available CPU cores), they take about 4 seconds, the duration of the longest task, plus some overhead.

Before we continue, it is worth emphasizing what is meant by parallel execution: each task is handled by a separate CPU core, and the tasks run at the same time. So in the figure above, when tasks A, B, C and D are run in parallel, each of them runs on a different core.
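The arithmetic behind these numbers can be sketched in a few lines. This is an idealized model that ignores overhead and assumes at least one core per task; the variable names are purely illustrative.

```python
# Task durations in seconds, taken from the example above.
durations = {"A": 1, "B": 2, "C": 3, "D": 4}

# Sequential execution: tasks run one after the other, so the times add up.
sequential_time = sum(durations.values())

# Parallel execution with one core per task: all tasks start together,
# so the total time is that of the slowest task (overhead ignored).
parallel_time = max(durations.values())

print(f"sequential: {sequential_time}s, parallel: {parallel_time}s")
```

With fewer cores than tasks, the parallel time would fall somewhere between these two values, depending on how the tasks are scheduled.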

The worker script

Let us see a very simple example for worker.py; remember that it performs long computations:

```python
import sys
import time


def do_work(n):
    # Simulate a long computation by sleeping for n seconds.
    time.sleep(n)
    print('I just did some hard work for {}s!'.format(n))


if __name__ == '__main__':
    if len(sys.argv) != 2:
        print('Please provide one integer argument', file=sys.stderr)
        sys.exit(1)
    try:
        seconds = int(sys.argv[1])
        do_work(seconds)
    except Exception as e:
        # Covers a non-integer argument as well as a negative sleep length.
        print(e)
```

worker.py exits with an error if it does not receive exactly one command-line argument. Otherwise, it converts the argument to an integer and calls the do_work() function with it; if the conversion fails, the exception message is printed instead. In turn, do_work() performs some hard work (sleeping for the specified number of seconds) and then outputs a message:

```
$ python worker.py 2
I just did some hard work for 2s!
```

(Just in case you were wondering, if do_work() is called with a negative integer, then it is the sleep() function that complains about it.)

The main script

Let us now see how to run worker.py from within another Python script. We will create a file main.py that creates four tasks. As shown in the figure above, the tasks take 1, 2, 3 and 4 seconds to finish, respectively. Each task consists of running worker.py with a different sleep length:

```python
import subprocess
import multiprocessing as mp
from tqdm import tqdm


NUMBER_OF_TASKS = 4
progress_bar = tqdm(total=NUMBER_OF_TASKS)


def work(sec_sleep):
    command = ['python', 'worker.py', sec_sleep]
    subprocess.call(command)


def update_progress_bar(_):
    progress_bar.update()


if __name__ == '__main__':
    pool = mp.Pool(NUMBER_OF_TASKS)

    for seconds in [str(x) for x in range(1, NUMBER_OF_TASKS + 1)]:
        pool.apply_async(work, (seconds,), callback=update_progress_bar)

    pool.close()
    pool.join()
```

The tasks are run in parallel using NUMBER_OF_TASKS (4) processes in a multiprocessing pool (lines 20-26). When we say that the tasks are run in parallel, we mean that the apply_async() method is applied to every task (line 23). The first argument to apply_async() is the function to execute asynchronously (work()), the second one is a tuple holding the arguments for work() (here only seconds), and the third one is a callback, our update_progress_bar() function, which is called each time a task completes.

The work() function (lines 10-12) calls our previous script worker.py with the specified number of seconds. This is done through the Python subprocess module.
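The apply_async() and callback mechanics can be tried in isolation with the sketch below. It makes two substitutions that are assumptions of this example, not part of the original script: it uses multiprocessing.pool.ThreadPool, which exposes the same apply_async() interface as Pool, and it replaces worker.py with an inline `-c` snippet so that the example is self-contained. The names run_all, on_done and finished are hypothetical.

```python
import subprocess
import sys
from multiprocessing.pool import ThreadPool

finished = []  # collects the values passed to the callback


def work(sec_sleep):
    # sys.executable is the interpreter running this script; the -c snippet
    # stands in for worker.py and simply sleeps for the given time.
    command = [sys.executable, "-c", f"import time; time.sleep({sec_sleep})"]
    return subprocess.call(command)  # exit code of the child process


def on_done(return_code):
    # apply_async() passes the return value of work() to the callback.
    finished.append(return_code)


def run_all(sleep_lengths):
    pool = ThreadPool(len(sleep_lengths))
    for seconds in sleep_lengths:
        pool.apply_async(work, (seconds,), callback=on_done)
    pool.close()  # no more tasks will be submitted
    pool.join()   # wait until every submitted task has finished


if __name__ == "__main__":
    run_all(["0.1", "0.2", "0.3"])
    print(finished)
```

Because each task spends its time waiting on a child process, threads are enough to run the children concurrently here; a process pool as in main.py works the same way from the caller's point of view.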

As for tqdm, it is a handy little package that displays a progress bar for the number of items in an iteration. It can be installed through pip, conda or snap.

Parallelization in practice

When main.py is run, the four tasks finish in about 4 seconds, the duration of the longest task, meaning that the execution of the worker.py script has been successfully parallelized.

The next post Multiprocessing in Python with shared resources iterates on what we have just seen in order to show how we can parallelize external Python scripts that need to access the same shared resource.

Further reading

- subprocess (Python documentation)

- multiprocessing (Python documentation)

- Parallel processing in Python (Frank Hofmann on stackabuse)

- multiprocessing – Manage processes like threads (Doug Hellmann on Python Module of the Week)

Launch Script From Another Python Script

The subprocess module in Python allows you to spawn new processes, connect to their input/output/error pipes, and obtain their return codes. This module can be used for launching a script and/or executable from another Python script.

The script below prints a dot every second for about 10 seconds and then exits with a success status. We will use it to demonstrate how to launch one script from another.

```python
# File: count.py
import time
import sys


def main():
    loop_count = 0
    while True:
        print('.', end='')
        loop_count += 1
        if loop_count > 10:
            break
        time.sleep(1)
    print('END')
    sys.exit(0)


if __name__ == '__main__':
    main()
```

Using subprocess.run

Below is the signature of the run() function:

subprocess.run(args, *, stdin=None, input=None, stdout=None, stderr=None, capture_output=False, shell=False, cwd=None, timeout=None, check=False, encoding=None, errors=None, text=None, env=None, universal_newlines=None, **other_popen_kwargs)

It runs the command described by args, waits for the command to complete, then returns a CompletedProcess instance.

- The input argument is passed to Popen.communicate() and thus to the subprocess’s stdin. If used it must be a byte sequence, or a string if encoding or errors is specified or text is true. When used, the internal Popen object is automatically created with stdin=PIPE, and the stdin argument may not be used as well.

- If capture_output is true, stdout and stderr will be captured.

- The timeout argument is passed to Popen.communicate(). If the timeout expires, the child process will be killed and waited for. The TimeoutExpired exception will be re-raised after the child process has terminated.

- If check is true, and the process exits with a non-zero exit code, a CalledProcessError exception will be raised. Attributes of that exception hold the arguments, the exit code, and stdout and stderr if they were captured.

- If encoding or errors are specified, or text is true, file objects for stdin, stdout and stderr are opened in text mode using the specified encoding and errors or the io.TextIOWrapper default. The universal_newlines argument is equivalent to text and is provided for backwards compatibility. By default, file objects are opened in binary mode.

- If env is not None, it must be a mapping that defines the environment variables for the new process; these are used instead of the default behavior of inheriting the current process’ environment. It is passed directly to Popen.
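The options listed above can be combined in a single call, as in the following sketch. The child command is a one-line Python program invented for this example (it is not count.py), and the GREETING environment variable is likewise hypothetical.

```python
import os
import subprocess
import sys

# Stand-in child program: echoes its stdin in upper case, then prints
# the value of an environment variable.
child_code = (
    "import os, sys; "
    "print(sys.stdin.read().upper()); "
    "print(os.environ.get('GREETING', 'unset'))"
)

# env replaces the inherited environment, so start from a copy of it.
env = dict(os.environ, GREETING="hello")

result = subprocess.run(
    [sys.executable, "-c", child_code],
    input="some text",    # sent to the child's stdin
    capture_output=True,  # collect stdout and stderr
    text=True,            # work with str instead of bytes
    timeout=30,           # raise TimeoutExpired if the child runs too long
    check=True,           # raise CalledProcessError on a non-zero exit code
    env=env,
)
print(result.stdout)

# With check=True, a failing child raises an exception carrying the exit code.
try:
    subprocess.run([sys.executable, "-c", "import sys; sys.exit(3)"], check=True)
except subprocess.CalledProcessError as exc:
    failed_code = exc.returncode
    print("child failed with exit code", failed_code)
```

Using sys.executable rather than a hard-coded `python` keeps the child on the same interpreter as the parent, which is a design choice of this sketch rather than a requirement of run().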

Launch Script

The script below launches a process to execute the Python script (count.py) and waits until the process completes.

```python
import subprocess

p = subprocess.run('python count.py', shell=True)
# or
# p = subprocess.run(['python', 'count.py'])
print('returncode', p.returncode)
print('EXIT')
```

Execute Shell Command

subprocess.run can also be used to execute shell commands. It gives us the flexibility to suppress the output of shell commands or to chain the inputs and outputs of various commands together.

```python
import subprocess

list_files = subprocess.run(["ls", "-l"])
print("The exit code was: %d" % list_files.returncode)
```

The example below shows how to pass input to the command.

```python
import subprocess

useless_cat_call = subprocess.run(["cat"], stdout=subprocess.PIPE, text=True, input="cat input")
print(useless_cat_call.stdout)  # cat input
```
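Chaining commands and suppressing output, both mentioned earlier in this section, might look like the sketch below. To stay portable it uses small inline Python programs in place of real shell commands; these stand-ins are assumptions of the example.

```python
import subprocess
import sys

# First command: writes three unsorted lines to stdout.
producer = subprocess.run(
    [sys.executable, "-c", "print('b'); print('a'); print('c')"],
    stdout=subprocess.PIPE, text=True, check=True,
)

# Second command: receives the first command's output on stdin and sorts it.
consumer = subprocess.run(
    [sys.executable, "-c",
     "import sys; print(''.join(sorted(sys.stdin)), end='')"],
    input=producer.stdout, stdout=subprocess.PIPE, text=True, check=True,
)
sorted_lines = consumer.stdout.splitlines()
print(sorted_lines)

# Suppress output entirely by redirecting it to DEVNULL.
quiet = subprocess.run(
    [sys.executable, "-c", "print('you will not see this')"],
    stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL,
)
print("exit code:", quiet.returncode)
```

Here the first command finishes before the second starts, with its whole output held in memory; for large or streaming data, connecting two Popen objects through a pipe is the usual alternative.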

Using subprocess.Popen

Below is the syntax of Popen.

class subprocess.Popen(args, bufsize=-1, executable=None, stdin=None, stdout=None, stderr=None, preexec_fn=None, close_fds=True, shell=False, cwd=None, env=None, universal_newlines=None, startupinfo=None, creationflags=0, restore_signals=True, start_new_session=False, pass_fds=(), *, group=None, extra_groups=None, user=None, umask=-1, encoding=None, errors=None, text=None, pipesize=-1)

Execute a child program in a new process. The arguments to Popen are as follows.

- args should be a sequence of program arguments or else a single string or path-like object. By default, the program to execute is the first item in args if args is a sequence. If args is a string, the interpretation is platform-dependent.

- The shell argument (which defaults to False) specifies whether to use the shell as the program to execute. If shell is True, it is recommended to pass args as a string rather than as a sequence.

- The executable argument specifies a replacement program to execute. It is very seldom needed. When shell=False, executable replaces the program to execute specified by args.

- stdin, stdout and stderr specify the executed program’s standard input, standard output and standard error file handles, respectively. Valid values are PIPE, DEVNULL, an existing file descriptor (a positive integer), an existing file object, and None. PIPE indicates that a new pipe to the child should be created. DEVNULL indicates that the special file os.devnull will be used. With the default settings of None, no redirection will occur; the child’s file handles will be inherited from the parent. Additionally, stderr can be STDOUT, which indicates that the stderr data from the applications should be captured into the same file handle as for stdout.
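The redirection values described above (PIPE, STDOUT and DEVNULL) can be illustrated as follows. The child program is an inline snippet written for this sketch, not one of the scripts from this post.

```python
import subprocess
import sys

# Stand-in child program: writes one line to stdout and one to stderr.
child_code = (
    "import sys; "
    "print('to stdout'); sys.stdout.flush(); "
    "print('to stderr', file=sys.stderr)"
)

# stdout=PIPE creates a pipe to the child; stderr=STDOUT sends the child's
# stderr into that same pipe.
p = subprocess.Popen(
    [sys.executable, "-c", child_code],
    stdout=subprocess.PIPE, stderr=subprocess.STDOUT, text=True,
)
merged, err = p.communicate()
print(merged)  # both lines arrive through stdout
print(err)     # None, because stderr has no pipe of its own

# stdout=DEVNULL discards the child's output.
silent = subprocess.Popen(
    [sys.executable, "-c", "print('discarded')"],
    stdout=subprocess.DEVNULL,
)
silent_code = silent.wait()
```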

The example below launches a process to execute the Python script and does not wait for the script to complete.

```python
import subprocess

p = subprocess.Popen(['python3', 'count.py'])
print('pid', p.pid)
print('EXIT')

# Output
# pid 28730
# EXIT
# ...........END
```

Process Interaction

The script below is an extended version of the previous one. It captures the output and errors of the called script and waits for the launched process to finish. Finally, it displays the output, the errors and the return code.

```python
import subprocess

p = subprocess.Popen(['python3', 'count.py'], stdout=subprocess.PIPE, stderr=subprocess.PIPE)
out, err = p.communicate()
print('Output : ', out)
print('Error :', err)
print('returncode', p.returncode)
```

Popen.communicate(input=None, timeout=None) interacts with the process. It sends data to stdin and reads data from stdout and stderr until end-of-file is reached. It also waits for the process to terminate and sets the returncode attribute. Note that if you want to send data to the process’s stdin, you need to create the Popen object with stdin=PIPE. Similarly, to get anything other than None in the result tuple, you need to give stdout=PIPE and/or stderr=PIPE too.

If the process does not terminate after timeout seconds, a TimeoutExpired exception will be raised. Catching this exception and retrying communication will not lose any output. The child process is not killed if the timeout expires, so in order to clean up properly a well-behaved application should kill the child process and finish communication.
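The clean-up described above can be sketched as follows. The long-running child is an inline snippet chosen for this example, and the one-second timeout is arbitrary.

```python
import subprocess
import sys

# Stand-in child program that sleeps far longer than we are willing to wait.
p = subprocess.Popen(
    [sys.executable, "-c", "import time; time.sleep(30)"],
    stdout=subprocess.PIPE, stderr=subprocess.PIPE, text=True,
)

timed_out = False
try:
    out, err = p.communicate(timeout=1)
except subprocess.TimeoutExpired:
    timed_out = True
    p.kill()                    # the child is not killed automatically
    out, err = p.communicate()  # collect remaining output and reap the child

print("timed out:", timed_out)
print("returncode:", p.returncode)
```

After the second communicate() call the child has been waited for, so its returncode attribute is set and no zombie process is left behind.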

Changing stdout and stderr

Output and errors can also be written to files, as shown in the example below.

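```python
import subprocess

# Open the log files in binary mode and pass them to the child process
# as its stdout and stderr.
with open("test.log", "wb") as out, open("test-error.log", "wb") as err:
    p = subprocess.Popen(['python', 'test.py'], stdout=out, stderr=err)
```

Run another Programming Language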

We can run programs written in other programming languages from Python and capture their output. For instance, let’s create a hello world program in C++. In order to compile and execute it, you’ll need to have a C++ compiler installed.

The script below compiles and executes the C++ program. It first finds all the C++ files in the current working directory, then compiles and runs them one by one.

```python
from glob import glob
import subprocess

# Get all files with the .cpp extension
cpp_files = glob('*.cpp')
for file in cpp_files:
    # Compile the file with g++, then run the resulting binary
    process = subprocess.run(f'g++ {file} -o out; ./out', shell=True, capture_output=True, text=True)
    output = process.stdout.strip() + '\nExecuting by Python'
    print(output)
```