- How to Get href of Element using BeautifulSoup [Easily]

- Get the href attribute of a tag

- Get the href attribute of multi tags

- A Step-by-Step Guide to Fetching the URL from the ‘href’ attribute using BeautifulSoup

- Prerequisite: Install and Import requests and BeautifulSoup

- Find the href entries from a webpage

- Using soup.find_all()

- Using SoupStrainer class

- Fetch the value of href attribute

- Using tag[‘href’]

- Using tag.get(‘href’)

- Real-Time Examples

- Example 1: Fetch all the URLs from the webpage.

- Example 2: Fetch all URLs based on some condition

- Example 3: Fetch the URLs based on the value of different attributes

- Conclusion

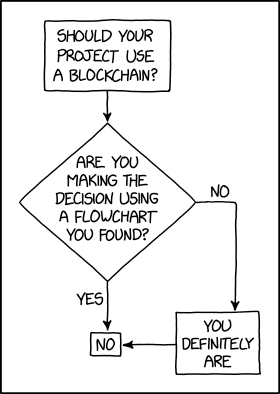

- Programmer Humor – Blockchain

How to Get href of Element using BeautifulSoup [Easily]

To get the href attribute of a tag, we need to use the following syntax:

    tag['href']

Get the href attribute of a tag

In the following example, we'll use the find() function to find the first <a> tag and ['href'] to print its href attribute.

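    from bs4 import BeautifulSoup

    # Sample markup; the href value is a placeholder for illustration
    html = '''
    <a href="https://example.com/python-string">Python string</a>
    '''

    soup = BeautifulSoup(html, 'html.parser')  # 👉️ Parsing
    a_tag = soup.find('a', href=True)  # 👉️ Find the first tag that has an href attr
    print(a_tag['href'])  # 👉️ Print href

href=True: matches only the tags that have an href attribute.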
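Note that find() returns None when no tag matches, so indexing the result directly would raise a TypeError on a page without links. The following is a minimal sketch of a guarded lookup; the helper name first_href and the sample markup are assumptions made for illustration.

```python
from bs4 import BeautifulSoup


def first_href(html):
    """Return the href of the first <a> tag that has one, or None."""
    soup = BeautifulSoup(html, "html.parser")
    a_tag = soup.find("a", href=True)  # None when nothing matches
    if a_tag is None:
        return None
    return a_tag["href"]


# Hypothetical markup: the first anchor has no href, the second does
with_link = '<a name="top">Top</a> <a href="/docs">Docs</a>'
without_link = "<p>No links here</p>"

print(first_href(with_link))     # /docs
print(first_href(without_link))  # None
```

Checking for None before indexing keeps the script from crashing on pages that contain no links at all.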

Get the href attribute of multi tags

To get the href of multiple tags, we need to use the find_all() function to find all the tags and ['href'] to print the href attribute of each one. Let's see an example.

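    from bs4 import BeautifulSoup

    # Sample markup; the href values are placeholders for illustration
    html = '''
    <a href="https://example.com/python-string">Python string</a>
    <a href="https://example.com/python-variable">Python variable</a>
    <a href="https://example.com/python-list">Python list</a>
    <a href="https://example.com/python-set">Python set</a>
    '''

    soup = BeautifulSoup(html, 'html.parser')  # 👉️ Parsing
    a_tags = soup.find_all('a', href=True)  # 👉️ Find all tags that have an href attr

    # 👇 Loop over the results
    for tag in a_tags:
        print(tag['href'])  # 👉️ Print href

Remember, when you want to get any attribute of a tag, use the following syntax:

    tag['attribute_name']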
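The same square-bracket lookup works for attributes other than href. Here is a small sketch; the markup and attribute values are made up for illustration. One detail worth knowing is that Beautiful Soup treats class as a multi-valued attribute and returns it as a list.

```python
from bs4 import BeautifulSoup

# Hypothetical markup for illustration
html = '<a href="/list" class="nav main" id="list-link" title="Python list">Python list</a>'

soup = BeautifulSoup(html, "html.parser")
tag = soup.find("a")

print(tag["href"])   # /list
print(tag["id"])     # list-link
print(tag["title"])  # Python list
print(tag["class"])  # ['nav', 'main'] (multi-valued attributes come back as a list)
```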


A Step-by-Step Guide to Fetching the URL from the ‘href’ attribute using BeautifulSoup

In almost all web scraping projects, fetching the URLs from the href attribute is a common task.

In today’s article, let’s learn different ways of fetching the URL from the href attribute using Beautiful Soup.

To fetch the URL, we first have to find all the anchor tags, or href entries, on the webpage, and then fetch the value of each href attribute.

Two ways to find all the anchor tags or href entries on the webpage are:

- soup.find_all()
- SoupStrainer class

Once all the href entries are found, we fetch the values using one of the following methods:

- tag['href']
- tag.get('href')

Prerequisite: Install and Import requests and BeautifulSoup

Throughout the article, we will use the requests module to access the webpage and BeautifulSoup for parsing and pulling the data from the HTML file.

To install requests on your system, open your terminal window and enter the below command:

    pip install requests

To install Beautiful Soup, open the terminal window and enter the below command:

    pip install beautifulsoup4

Then import both libraries into your program:

    import requests
    from bs4 import BeautifulSoup

Find the href entries from a webpage

Hyperlinks are defined with the anchor tag (<a> tag), and the link target is stored in its href attribute. So, the first task is to find all the <a> tags within the webpage.

Using soup.find_all()

Soup represents the parsed file. The method soup.find_all() gives back all the tags and strings that match the criteria.
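Before going to a live page, it can help to try find_all() on a small string. The sketch below uses made-up markup and needs no network access; it also shows that passing href=True narrows the result to anchors that actually carry a link.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: two links and one anchor without an href
html = """
<p>
  <a href="/about">About</a>
  <a href="https://example.com/">Example</a>
  <a name="bookmark">Bookmark</a>
</p>
"""

soup = BeautifulSoup(html, "html.parser")

all_anchors = soup.find_all("a")                # every <a> tag
linked_anchors = soup.find_all("a", href=True)  # only <a> tags with an href

print(len(all_anchors))     # 3
print(len(linked_anchors))  # 2
print([tag["href"] for tag in linked_anchors])  # ['/about', 'https://example.com/']
```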

    import requests
    from bs4 import BeautifulSoup

    url = "https://www.wikipedia.org/"

    # retrieve the data from URL
    response = requests.get(url)

    # parse the contents of the webpage
    soup = BeautifulSoup(response.text, 'html.parser')

    # filter all the <a> tags from the parsed document
    for tag in soup.find_all('a'):
        print(tag)

Output (abridged; only the link text of each printed tag is shown):

    English 6 383 000+ articles
    .
    .
    .
    Creative Commons Attribution-ShareAlike License
    Terms of Use
    Privacy Policy

Using SoupStrainer class

We can also use the SoupStrainer class. To use it, we have to first import it into the program using the below command.

from bs4 import SoupStrainer

Now, you can opt to parse only the required tags by passing a SoupStrainer object to the parse_only argument of BeautifulSoup.
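To see what parse_only does, here is a minimal offline sketch on made-up markup. Only the <a> tags end up in the parsed tree, so looking up any other tag returns None.

```python
from bs4 import BeautifulSoup, SoupStrainer

# Hypothetical markup for illustration
html = """
<html><body>
  <h1>Title</h1>
  <p>Intro <a href="/first">first</a></p>
  <div><a href="/second">second</a></div>
</body></html>
"""

only_a_tags = SoupStrainer("a")
soup = BeautifulSoup(html, "html.parser", parse_only=only_a_tags)

hrefs = [tag["href"] for tag in soup.find_all("a")]
print(hrefs)            # ['/first', '/second']
print(soup.find("h1"))  # None, because the <h1> tag was never parsed
```

Skipping everything except the anchors can save time and memory on large pages.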

    import requests
    from bs4 import BeautifulSoup, SoupStrainer

    url = "https://www.wikipedia.org/"

    # retrieve the data from URL
    response = requests.get(url)

    # parse only the <a> tags from the webpage
    soup = BeautifulSoup(response.text, 'html.parser', parse_only=SoupStrainer("a"))

    for tag in soup:
        print(tag)

Output (abridged; only the link text of each printed tag is shown):

    English 6 383 000+ articles
    .
    .
    .
    Creative Commons Attribution-ShareAlike License
    Terms of Use
    Privacy Policy

Fetch the value of href attribute

Once we have fetched the required tags, we can retrieve the value of the href attribute.

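All the attributes and their values are stored in the form of a dictionary. Refer to the example below:

    from bs4 import BeautifulSoup

    # markup modeled on the Beautiful Soup documentation sample
    sample_string = """<a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>"""
    soup = BeautifulSoup(sample_string, 'html.parser')
    atag = soup.find_all('a')[0]
    print(atag)
    print(atag.attrs)

Output:

    <a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>
    {'class': ['sister'], 'href': 'http://example.com/elsie', 'id': 'link1'}

Using tag[‘href’]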

As seen in the output, the attributes and their values are stored in the form of a dictionary.

To access the value of the href attribute, just say

    tag['href']

Now, let’s tweak the above program to print the href values.

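    from bs4 import BeautifulSoup

    # markup modeled on the Beautiful Soup documentation sample
    sample_string = """<a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>"""
    soup = BeautifulSoup(sample_string, 'html.parser')
    atag = soup.find_all('a')[0]
    print(atag['href'])

Using tag.get(‘href’)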

Alternatively, we can use the get() method on the tag, which works like get() on a dictionary, to retrieve the value of 'href'.
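The two lookups differ when a tag has no href attribute: tag['href'] raises a KeyError, while tag.get('href') returns None or a default of our choice. Here is a short sketch on made-up markup:

```python
from bs4 import BeautifulSoup

# Hypothetical markup: one anchor with an href and one without
html = '<a href="http://example.com/elsie">Elsie</a><a name="anchor">No link</a>'
soup = BeautifulSoup(html, "html.parser")
with_href, without_href = soup.find_all("a")

print(with_href.get("href"))          # http://example.com/elsie
print(without_href.get("href"))       # None
print(without_href.get("href", "#"))  # falls back to the default "#"

try:
    without_href["href"]
except KeyError:
    print("no href attribute")
```

So get() is the safer choice when looping over tags that may or may not carry a link.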

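    from bs4 import BeautifulSoup

    # markup modeled on the Beautiful Soup documentation sample
    sample_string = """<a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>"""
    soup = BeautifulSoup(sample_string, 'html.parser')
    atag = soup.find_all('a')[0]
    print(atag.get('href'))

Real-Time Examples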

Now that we know how to fetch the value of the href attribute, let’s look at some of the real-time use cases.

Example 1: Fetch all the URLs from the webpage.

Let’s scrape the Wikipedia main page to find all the href entries.

    from bs4 import BeautifulSoup
    import requests

    url = "https://www.wikipedia.org/"

    # retrieve the data from URL
    response = requests.get(url)

    if response.status_code == 200:
        soup = BeautifulSoup(response.text, 'html.parser')
        for tag in soup.find_all(href=True):
            print(tag['href'])

Output:

    //cu.wikipedia.org/
    //ss.wikipedia.org/
    //din.wikipedia.org/
    //chr.wikipedia.org/
    .
    .
    .
    .
    //www.wikisource.org/
    //species.wikimedia.org/
    //meta.wikimedia.org/
    https://creativecommons.org/licenses/by-sa/3.0/
    https://meta.wikimedia.org/wiki/Terms_of_use
    https://meta.wikimedia.org/wiki/Privacy_policy

As you can see, all the href entries are printed.
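Many of these entries start with "//", which means they are protocol-relative rather than complete URLs. If we need absolute URLs, one option (not part of the example above) is urljoin() from Python's standard library. The sketch below resolves a few href values against the page URL; the "/portal/about" path is a hypothetical value added for illustration.

```python
from urllib.parse import urljoin

base_url = "https://www.wikipedia.org/"

hrefs = [
    "//cu.wikipedia.org/",                           # protocol-relative
    "https://meta.wikimedia.org/wiki/Terms_of_use",  # already absolute
    "/portal/about",                                 # hypothetical relative path
]

# Resolve every href against the URL of the page it was found on
absolute_urls = [urljoin(base_url, href) for href in hrefs]

for absolute_url in absolute_urls:
    print(absolute_url)
# https://cu.wikipedia.org/
# https://meta.wikimedia.org/wiki/Terms_of_use
# https://www.wikipedia.org/portal/about
```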

Example 2: Fetch all URLs based on some condition

Let's say we need to find only the outbound links. From the output, we can see that most of the inbound links do not start with "https://".

Thus, we can use the regular expression "^https://" to match the URLs that start with "https://" as shown below.

Also, we skip any URL that contains 'wikipedia', so that links back to Wikipedia itself do not appear in the result.

    from bs4 import BeautifulSoup
    import requests
    import re

    url = "https://www.wikipedia.org/"

    # retrieve the data from URL
    response = requests.get(url)

    if response.status_code == 200:
        soup = BeautifulSoup(response.text, 'html.parser')
        for tag in soup.find_all(href=re.compile("^https://")):
            if 'wikipedia' in tag['href']:
                continue
            else:
                print(tag['href'])

Output:

    https://meta.wikimedia.org/wiki/Special:MyLanguage/List_of_Wikipedias
    https://donate.wikimedia.org/?utm_medium=portal&utm_campaign=portalFooter&utm_source=portalFooter
    .
    .
    .
    https://meta.wikimedia.org/wiki/Terms_of_use
    https://meta.wikimedia.org/wiki/Privacy_policy
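The substring check above also drops any outside URL that merely mentions "wikipedia" somewhere in its path. A stricter alternative, sketched here as an assumption rather than as part of the original example, is to compare the hostname using urlparse(). The helper name is_outbound and the two URLs marked hypothetical are made up for illustration.

```python
from urllib.parse import urlparse


def is_outbound(href, own_domain="wikipedia.org"):
    """Return True for absolute https links whose host is outside own_domain."""
    parts = urlparse(href)
    if parts.scheme != "https" or not parts.hostname:
        return False
    host = parts.hostname
    return not (host == own_domain or host.endswith("." + own_domain))


hrefs = [
    "//cu.wikipedia.org/",                             # no scheme, skipped
    "https://en.wikipedia.org/",                       # hypothetical, same domain
    "https://meta.wikimedia.org/wiki/Terms_of_use",    # outbound
    "https://example.com/articles/wikipedia-history",  # hypothetical, outbound
]

outbound = [href for href in hrefs if is_outbound(href)]
print(outbound)
```

Here the last URL is kept even though its path contains "wikipedia", because only the hostname is compared.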

Example 3: Fetch the URLs based on the value of different attributes

Consider a file, sample.html, that contains several <a> tags with different class and id attributes.

Let's say we need to fetch the URL from the tag with class=sister and id=link2. We can do that by passing these conditions to find_all().
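To try this without a file on disk, here is a self-contained sketch. The markup is an assumption modeled on the Beautiful Soup documentation sample. It shows two equivalent ways to state the condition: keyword filters on find_all() and a CSS selector with select().

```python
from bs4 import BeautifulSoup

# Assumed markup, modeled on the Beautiful Soup documentation sample
html = """
<p class="story">
  <a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>
  <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a>
  <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>
</p>
"""

soup = BeautifulSoup(html, "html.parser")

# Keyword filters; class_ is used because "class" is a reserved word in Python
by_keywords = [tag["href"] for tag in soup.find_all("a", class_="sister", id="link2")]

# The same condition written as a CSS selector
by_selector = [tag["href"] for tag in soup.select("a.sister#link2")]

print(by_keywords)  # ['http://example.com/lacie']
print(by_selector)  # ['http://example.com/lacie']
```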

    from bs4 import BeautifulSoup

    # open the html file
    with open("sample.html") as f:
        # parse the contents of the html file
        soup = BeautifulSoup(f, 'html.parser')

    # find the tags with matching criteria
    for tag in soup.find_all('a', {'class': 'sister', 'id': 'link2'}):
        print(tag['href'])

Conclusion

That brings us to the end of this tutorial. In this short tutorial, we have learned how to fetch the value of the href attribute within the HTML tag. We hope this article has been informative. Thanks for reading.
