- Python Read Excel and Insert data to SQL

- Import csv files into Pandas Dataframe

- Import first csv into a Dataframe:

- Import second csv into another Dataframe

- Merge Dataframes:

- Saved searches

- Use saved searches to filter your results more quickly

- DocMorg/testworkhse

- Name already in use

- Sign In Required

- Launching GitHub Desktop

- Launching GitHub Desktop

- Launching Xcode

- Launching Visual Studio Code

- Latest commit

- Git stats

- Files

- README.md

- About

- Как правильно импортировать таблицу excel в бд postgre?

Python Read Excel and Insert data to SQL

Often we encounter this challenge to deal with multiple csv files and we start looking out for options to import these files to MySQL or PostgresSQL Databases. For many of us this possess a challenge because we don’t know a good tool or a way to merge these csv files into one and import it to the SQL table. From my experience I learnt there is no requirement for any additional tool or library or install any packages like csvtoolkit to achieve this. if you have Python, Pandas installed on your computer which I assume will be since you came to this blog searching for a pythonic way of doing it.

In this post, I am going to assume that you have MYSQL/PostgresSQL setups done on your computer and the Table that you want to populate with the csv data is already created and have all the required columns same as the columns present in the csv files.

if Pandas not installed on your computer then install it using pip:

I have two csv files which I wanted to merge.These files contains Red and White Wines details of different brands and their respective acidity,sugar,chlorides,pH,alcohol content value

a) Red_Wine.csv : Total Rows: 1599

b) White_Wine.csv : Total Rows: 4898

Note both the files have same no. of columns and headers which is as follows:

fixed acidity’, ‘volatile acidity’, ‘citric acid’, ‘residual sugar’,’chlorides’, ‘free sulfur dioxide’, ‘total sulfur dioxide’, ‘density’,’pH’, ‘sulphates’, ‘alcohol’, ‘quality’

Import Pandas

Import csv files into Pandas Dataframe

Import first csv into a Dataframe:

We are using these two arguments of Pandas read_csv function, First argument is the path of the file where first csv is located and second argument is for the value separators in the file. In my case it is a semi-colon “;” but for most of the csv files it is comma ‘,’ which is a default value of this argument.

df=pd.read_csv("C:/user/vbabu/wine_csv/winequality-red.csv",sep=';') Import second csv into another Dataframe

df1=pd.read_csv("C:/user/vbabu/wine_csv/winequality-white.csv",sep=';') Merge Dataframes:

So we have imported the csv files into two dataframes and now it’s time to merge these two files. We will use Pandas concat function to merge these two files together

Import Merge Dataframe to MySQL and Postgres Table

Now we will push the rows of this merged dataframes into a MYSQL or Postgres Table and we have to first establish the connection with the MYSQL server using sqlalchemy.

from sqlalchemy import create_engine engine=create_engine("mysql+mysqldb://root:"+'root'+"@localhost/entityresolution") engine = create_engine("mysql+mysqldb://your_mysql_username:"+'your_mysql_password'+"@Mysql_server_hostname:your_port/Mysql_databasename") For Postgres

engine = create_engine('postgresql+psycopg2://your_mysql_username:your_mysql_password@Mysql_server_hostname:your_port/Mysql_databasename') Pandas to_sql function

we will use Pandas to_sql function which will insert these rows of merged dataframe into the MYSQL Table.

df.to_sql(name=Your_table_name_in_single_quotes, con=engine, if_exists='append',index=False) Look at the if_exists argument which is important here, there are 3 values for these arguments and if the table already exists::

fail: it’s a default value. Raise a ValueError.

replace: Drop the table before inserting new values.

append: Insert new values to the existing table.

I have chosen append since I want to insert new and fresh values to an empty Table

So you have seen how easy and fast it is to Merge and Upload the Data from CSV files to MYSQL or Postgres without the overhead of loading any tools or libraries

Updated: January 12, 2019

Saved searches

Use saved searches to filter your results more quickly

You signed in with another tab or window. Reload to refresh your session. You signed out in another tab or window. Reload to refresh your session. You switched accounts on another tab or window. Reload to refresh your session.

Excel to PostgreSQL transmitter written on Python 3.7

DocMorg/testworkhse

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

Name already in use

A tag already exists with the provided branch name. Many Git commands accept both tag and branch names, so creating this branch may cause unexpected behavior. Are you sure you want to create this branch?

Sign In Required

Please sign in to use Codespaces.

Launching GitHub Desktop

If nothing happens, download GitHub Desktop and try again.

Launching GitHub Desktop

If nothing happens, download GitHub Desktop and try again.

Launching Xcode

If nothing happens, download Xcode and try again.

Launching Visual Studio Code

Your codespace will open once ready.

There was a problem preparing your codespace, please try again.

Latest commit

Git stats

Files

Failed to load latest commit information.

README.md

Excel to PostgreSQL transmitter written on Python 3.7

for help type in command line:

This is the test work, which purpose is to merge data from Microsoft Excel (.xlsx format) data files to PostgreSQL database. It supports creating table with excel file on input , updating it and adding indexes . If there is an type intersection in one row during updating or creating, the whole row will be converted to string format. Names of the rows should be on the first line of the file.

To run the program type in command line:

$ python project.py excel.xlsx [-args]

where excel.xlsx — is the MS Excel file entry and -args — argument you need.

In the ‘test.py’ file are the tests made for this program.

About

Excel to PostgreSQL transmitter written on Python 3.7

Как правильно импортировать таблицу excel в бд postgre?

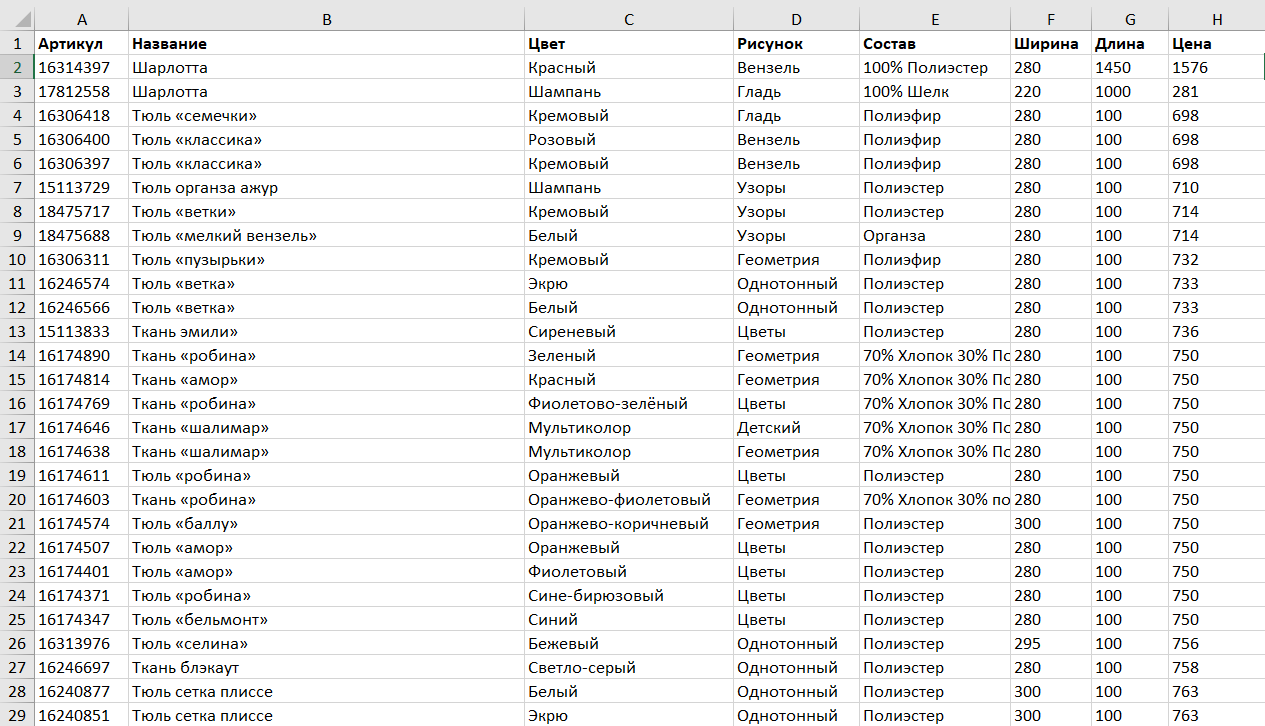

есть таблица в excel:

код на python для импорта таблицы в postgre (все типы данных в бд и excel — текст)

import openpyxl import psycopg2 conn = psycopg2.connect( host="127.0.0.1", database="educational_practice", user="postgres", password="" ) cur = conn.cursor() workbook = openpyxl.load_workbook('data.xlsx') worksheet = workbook['Ткани'] for row in worksheet.iter_rows(min_row=2, max_row=496, values_only=True): cur.execute("INSERT INTO excel.textile1 (vendor_code, name, color, drawing, composition, width, length, price) VALUES (%s, %s, %s, %s, %s, %s, %s, %s)", row) conn.commit() cur.close() conn.close()not all arguments converted during string formattingЯ бы в принципе рекомендовал использовать pandas для таких целей.

1. Читаем excel в pandas dataframe псевдокод будет такой.

import pandas as pd df =pd.read_excel(data.xlsx,sheet_name=’Ткани’)у функции read_excel много параметров, в том числе header, index_col которые возможно придется настраивать.

2. Глянул бы на него что таблица в порядке (это как фрейм запишется в базу), глянул бы на типы данных, df.dtypes что бы все типы колонок, были в том виде как их ожидает база. Если надо изменил бы их. Проверил бы есть ли пропущенные значения (nan, NA). Если есть провел бы работу с ними.

3. Затем бы отправлял в базу методом to_sql(). Псевдо код будет такой.

df.to_sql('table_name', connection, if_exists='replace')Параметр if_exists (если таблица существует) имеет также метод append просто весь фрейм добавит новыми строками, и fail тогда будет ошибка.

У pandas хорошая документация и он силен в чтениях фалов любых форматов, так что если что то не получается, это почти всегда поправимо, ну и как писать в базу тоже конфигурируется очень удобно, так что если в этом будут проблемы то тоже решается.